Show the code

set.seed(1337)

library("tidymodels")

tidymodels::tidymodels_prefer()

library("plotly")Set seed and load packages.

set.seed(1337)

library("tidymodels")

tidymodels::tidymodels_prefer()

library("plotly")Load data.

mtcars <- mtcars |>

as_tibble()If not tuned properly, models can be overfitted to the training data causing the individual model to simply memorize the training data, but then perform poorly on new data. Regularizing the model is a way to prevent this by penalizing fitting too closely to the training data. The concept is also known as the Bias-Variance trade off further introduced in Chapter 12.

Elastic Net is an extension of Ordinary Least Squares (OLS) which introduces regularizing by a combination of Ridge and Lasso regression. Generally, Ridge is used to penalize large coefficients and Lasso is used to select coefficients. Elastic Net is a compromise between the two. These two methods are explained separately in the next two sections and lastly it is explained how they are combined in Elastic Net.

Recall from the OLS section (Chapter 7) that the goal is to minimize the sum of squared residuals (SSR).

\[ \beta_{OLS} = \min_{\beta} ||y-X\beta||_{2}^{2} \]

Ridge is essentially OLS, except for an added penalty term to the minimization of the SSR:

\[ \beta_{Ridge} = \min_{\beta} ||y-X\beta||_{2}^{2} + \lambda||\beta||_{2}^{2} \]

Finding the coefficients for the linear model now becomes a compromise between minimizing the SSR and minimizing the value of the coefficients. \(\lambda\) is a hyperparameter that controls the trade-off between the two. When \(\lambda = 0\), Ridge regression is the same as OLS. When \(\lambda\) is very large, the coefficients are driven towards zero as the penalty term \(\lambda||\beta||_{2}^{2}\) becomes large compared to the residuals. The coefficients can never be set to zero, but they can be driven asymptotically close to zero. The reason is, when the coefficients are close to zero, the model prefers fitting to the data instead of further reducing the size of the coefficients. See a more detailed explanation in Chapter 7.

Introducing the penalty term makes the fit to the data worse, but it can improve the generalization to new data. The coefficients obtained through Ridge tends to be small compared to OLS, so the model tends to be less sensitive to changes in the data. In machine learning terms, this is called introducing bias (inability to fit data perfectly) to reduce variance (the model fits better to new data). The bias-variance trade-off is a fundamental concept in machine learning.

For visualization, remember the mtcars data set used previously. The following code chunk shows a 3D scatter plot of the weight of the car (wt) vs the horse power (hp) vs the miles per gallon (mpg). The color of the dots represent the horse power. When orienting the plot correctly, it is possible to see a linear correlation between the features.

mtcars |>

plot_ly(x = ~wt,

y = ~hp,

z = ~mpg,

color = ~hp,

type = "scatter3d",

mode = "markers") |>

plotly::layout(

scene = list(

xaxis = list(title = "Weight"),

yaxis = list(title = "Horse Power"),

zaxis = list(title = "Miles per Gallon"),

camera = list(

eye = list(x = 1.5, y = -2, z = 0.5)

)),

showlegend = FALSE)Two coefficients are used to try and model the data. The coefficients are the slope of the line for the weight and horse power. Large absolute values for the coefficients resukts in steep slopes, which in turn suggests that the response (mpg) is sensitive to the features (wt and hp). When presented to new data which is different from the training data, the model predicts wildly different responses due to the sensitivity to changes. The model then generalizes poorly to new data and is said to have high variance. By increasing lambda, the slope is reduced, and the model becomes less sensitive to the features. This is the bias-variance trade-off in action as seen in the following code chunks.

# CV folds object

mtcars_split <- initial_split(mtcars)

mtcars_train <- training(mtcars_split)

mtcars_test <- testing(mtcars_split)

mtcars_folds <- vfold_cv(mtcars_train,

v = 5)

# Setup model specs

ridge_spec <- linear_reg(penalty = tune(),

mixture = 0) |>

set_engine(engine = "glmnet") |>

set_mode("regression")

OLS_spec <- linear_reg() |> # added OLS to have lambda = 0

set_engine(engine = "lm") |>

set_mode("regression")

# Model recipe, no preprocessing

ridge_recipe <- recipe(mpg ~ wt + hp,

data = mtcars_train)

# Combine to worflow

ridge_wflow <- workflow_set(

preproc = list(recipe = ridge_recipe),

models = list(OLS = OLS_spec,

Ridge = ridge_spec)

)

# Change values of hyperparameters to search across

ridge_params <- ridge_spec |>

extract_parameter_set_dials() |>

update(penalty = penalty(c(-1, 1)))

# Add params to workflow

ridge_wflow <-

ridge_wflow |>

option_add(id = "recipe_Ridge",

param_info = ridge_params) |>

option_add(id = "recipe_Ridge",

control = control_grid(extract = function(x) x))

# Run through grid

ridge_grid_results <- ridge_wflow |>

workflow_map(

seed = 1337,

resamples = mtcars_folds,

grid = 5

)The following code chunk shows the results of the grid search. The plot shows the root mean square error (RMSE) for the Ridge regression model against the different lambdas. The RMSE increases as lambda increases suggesting a low lambda is preferred.

autoplot(ridge_grid_results,

id = "recipe_Ridge",

metric = "rmse")

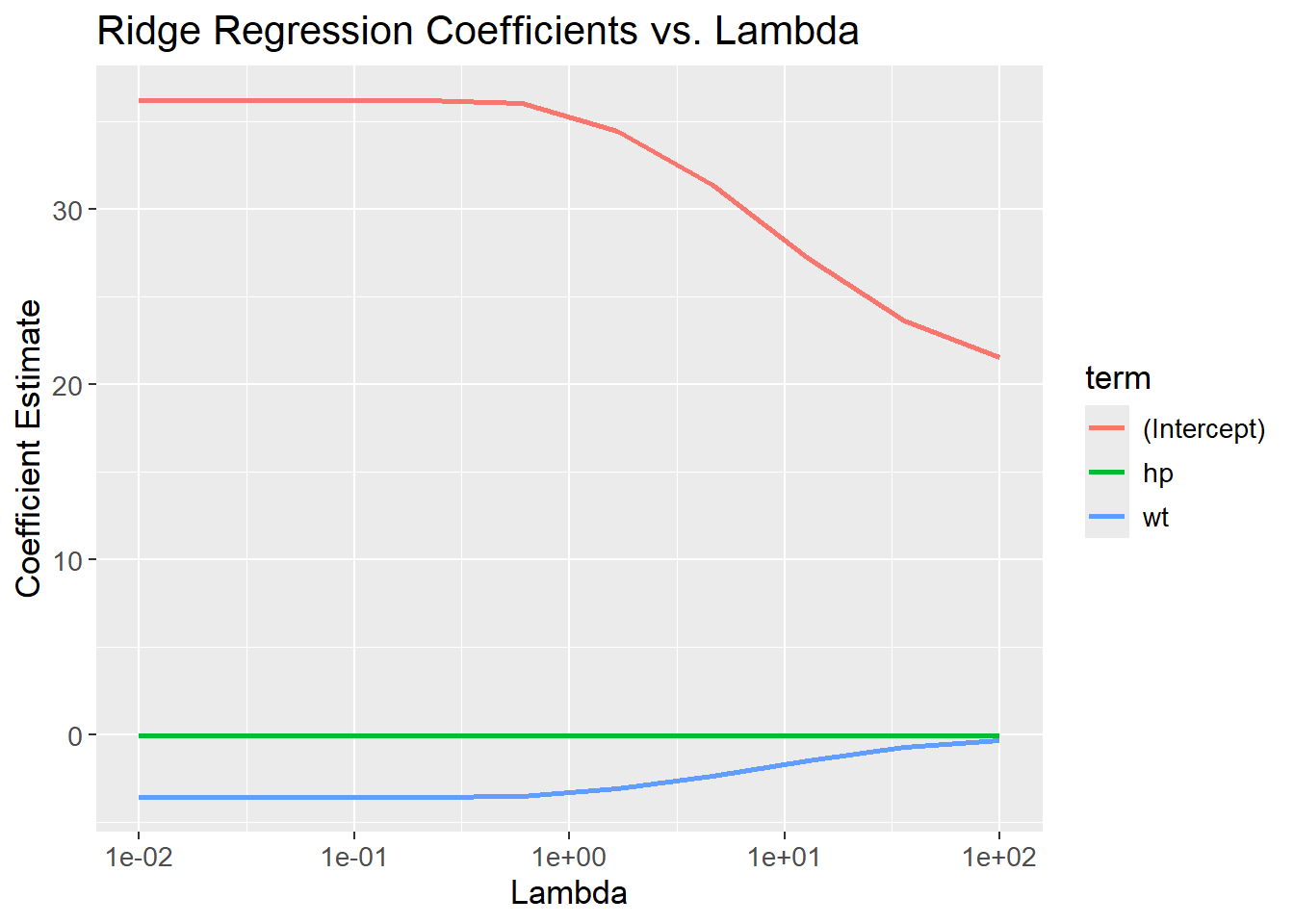

It is also possible to visualize the actual coefficients for the Ridge regression model. In the above, the lambda values were treated as hyperparameters and was optimized. If the goal is to visualize the coefficients for a specific lambda value, each value of lambda needs to be treated as its own model. The following code chunk shows how to do this.

# Define a grid of lambda values

lambda_grid <- 10^seq(-2, 2, length.out = 10)

# Create each function

ridge_models <- lambda_grid |>

map(function(lambda) {

linear_reg(penalty = lambda,

mixture = 0) |>

set_engine("glmnet") |>

fit(mpg ~ wt + hp,

data = mtcars)

})The coefficients are then plotted against the lambda values. The coefficients are reduced as lambda increases. The coefficients are not set to zero, but they are driven towards zero (and might visually appear to be zero).

# Unnest coefficients

ridge_coefficients <- bind_rows(map_dfr(ridge_models, ~tidy(.x))) %>%

mutate(lambda = rep(lambda_grid, each = 3))

# Visualize coefficients

ridge_coefficients |>

ggplot(aes(x = lambda,

y = estimate,

color = term)) +

geom_line(linewidth = 1) +

scale_x_log10() +

labs(title = "Ridge Regression Coefficients vs. Lambda",

x = "Lambda",

y = "Coefficient Estimate") +

theme(text=element_text(size=13))

It is possible plot each of the fitted models on top of the 3D scatterplot to see how the coefficients change with lambda. Due to the limitations of 3D-plotting with plotly, legends for each lambda value are not shown. The lambda values can instead be found be hovering each of the planes in the plot. It is seen that the slopes are reduced as lambda increases.

# Make predictions for each function

ridge_predictions <- ridge_models |>

map2(lambda_grid, function(model, lambda) {

model |>

predict(mtcars) |>

bind_cols(lambda = lambda)

}) |>

bind_rows()

# Bind col predictions to data set

mtcars_train_w_pred <- mtcars |>

bind_cols(ridge_predictions |>

pivot_wider(names_from = "lambda",

values_from = .pred,

names_prefix = "lambda_",

values_fn = list) |>

unnest(everything())) |>

pivot_longer(cols = starts_with("lambda_"),

names_to = "lambda",

values_to = "prediction") |>

mutate(lambda = as.numeric(stringr::str_remove(lambda, "lambda_")),

lambda = round(lambda, 3))

# Create visualization

mtcars_3d_scatter <- mtcars |>

plot_ly(x = ~wt,

y = ~hp,

z = ~mpg,

color = ~hp,

type = "scatter3d",

mode = "markers")

plot_w_predictions <- mtcars |>

plot_ly(

x = ~ wt,

y = ~ hp,

z = ~ mpg,

type = "scatter3d",

mode = "markers"

) |>

plotly::layout(

scene = list(

xaxis = list(title = "Weight"),

yaxis = list(title = "Horse Power"),

zaxis = list(title = "Miles per Gallon"),

camera = list(

eye = list(x = 1.5, y = -2, z = 0.5)

)),

showlegend = FALSE)

for (lambda in lambda_grid) {

mtcars_train_w_pred_filt <- mtcars_train_w_pred |>

filter(lambda == !!round(lambda, 3))

plot_w_predictions <- plot_w_predictions |>

add_trace(data = mtcars_train_w_pred_filt,

x = ~wt,

y = ~hp,

z = ~prediction,

color = ~lambda,

type = "mesh3d",

name = stringr::str_c("Lambda: ", lambda))

}

plot_w_predictionsRecall from Chapter 8 on Logistic Regression, that the algorithm seek to maximize the likelihoods:

\[ \beta_{LR} = \max_{\beta} \; L(\beta,x_i,y_i) \]

Where \(L(\beta,x_i,y_i)\) is the likelihood function mentioned in the chapter, \(\beta\) are the coefficients, ‘y’ are the classes, and ‘x’ are individual probabilities. Similarly to OLS, a penalization term is included when applying Ridge Regression. Since Logistic Regression seek to maximize likelihoods, whereas OLS seek to minimize residuals, the included penalization term is subtracted from the expression:

\[ \beta_{LR, Ridge} = \max_{\beta} \; L(\beta,x_i,y_i) - \lambda||\beta||_{2}^{2} \]

Lasso is similar to Ridge, except the penalty uses the absolute values of the coefficients instead of squaring them. This enables the Lasso Regression to set some coefficients to 0, resulting in a less complex model as it contains fewer features. Again, the \(\lambda\) hyperparameter is used to control the penalization. \(\lambda\) ranges from 0 to \(+\infty\), where higher values of \(\lambda\) gives a more penalized model, i.e. contain fewer features. The Lasso applied to OLS is given by:

\[ \beta_{Lasso} = \min_{\beta} ||y-X\beta||_{2}^{2} + \lambda||\beta||_{1} \]

By removing features, the algorithm can be used for dimensionality reduction. To exemplify, the below code chunk tries to predict mpg given all features using Lasso Regression. The expectation is, that some features contain too little information about the response, and is removed at higher values of \(\lambda\). Since we are not comparing different models, a much simpler approach can be applied:

set.seed(1337)

# Setup model specs

lasso_spec <- linear_reg(penalty = tune(),

mixture = 1) |>

set_engine(engine = "glmnet") |>

set_mode("regression")

# Change values of hyperparameters to search across

lasso_params <- lasso_spec |>

extract_parameter_set_dials() |>

update(penalty = penalty(c(-1, 0.1)))

# Tune the grid and find optimal lambda

lasso_grid_results <- lasso_spec |>

tune_grid(preprocessor = mpg ~ .,

resamples = mtcars_folds,

param_info = lasso_params,

metrics = metric_set(rmse),

grid = 100) |>

select_best(metric = "rmse")

# Update our model with the tuned parameter

lasso_tuned <- lasso_spec |>

finalize_model(lasso_grid_results)

# Fit model to data and get terms

lasso_tuned |>

fit(mpg ~ .,

data = mtcars_train) |>

tidy() |>

filter(estimate != 0)# A tibble: 7 × 3

term estimate penalty

<chr> <dbl> <dbl>

1 (Intercept) 11.9 0.150

2 hp -0.0209 0.150

3 drat 2.24 0.150

4 wt -1.83 0.150

5 qsec 0.501 0.150

6 am 3.23 0.150

7 carb -0.295 0.150As can be seen from the final output, the Lasso Regression has removed some features from the model. Six features remains in the model (and the intercept), whereas the last five are set to zero.

As for Ridge, Lasso can be used with logistic regression as well by subtracting the L1 norm of the coefficients.

\[ \beta_{LR, Lasso} = \max_{\beta} \; L(\beta,x_i,y_i) - \lambda||\beta||_{1} \]

Ridge reduces the size of coefficients making the model better at generalizing to new data (reducing variance), whereas Lasso removes irrelevant coefficients (thereby de-correlating coefficients). Their strengths are combined in Elastic Net as both of the regularization terms are used:

\[ \beta_{ENet} = \min_{\beta} ||y-X\beta||_{2}^{2} + \lambda_{1}||\beta||_{2}^{2} + \lambda_{2}||\beta||_{1} \]

Note that the two terms contain different lambdas. If both are equal to 0, it is the OLS model, if \(\lambda_{1}>0\) and \(\lambda_{2}=0\), it is Ridge and finally if \(\lambda_{1}=0\) and \(\lambda_{2}>0\) it is Lasso. By using different combinations of \(\lambda_{1}>0\) and \(\lambda_{2}>0\), it is possible to regulate how much weight each of the two methods have.

The glmnet package implements a different application of Elastic Net. It still contain a term for Ridge and a term for Lasso, but it introduces a new parameter \(\alpha\) instead of having two \(\lambda\)s. From the documentation, it is seen that the regularization term is:

\[ \lambda\left(\frac{(1-\alpha)||\beta||_{2}^{2}}{2} + \alpha||\beta||_{1}\right) \]

Where \(0 \le \alpha \le 1\). If \(\alpha=0\), the regularization applied is Ridge as \(\alpha||\beta||_{1}=0 \cdot ||\beta||_{1} = 0\) and thereby the Lasso term is ignored and vice versa for \(\alpha=1\). In the tidymodels universe, the \(\alpha\) parameter is called mixture. When looking at the examples of Ridge and Lasso in the above, notice how mixture is either equal to 0 or 1, depending on the use case.

mixture is also a hyperparameter, and also needs to be estimated with CV:

set.seed(1337)

# Setup model specs

enet_spec <- linear_reg(penalty = tune(),

mixture = tune()) |>

set_engine(engine = "glmnet") |>

set_mode("regression")

# Change values of hyperparameters to search across

enet_params <- enet_spec |>

extract_parameter_set_dials() |>

update(penalty = penalty(c(-1, 0)),

mixture = mixture(c(0, 1)))

# Tune the grid and find optimal lambda and mixture

enet_grid_results <- enet_spec |>

tune_grid(preprocessor = mpg ~ .,

resamples = mtcars_folds,

param_info = enet_params,

metrics = metric_set(rmse),

grid = 100

)

enet_grid_results |>

show_best(metric = "rmse",

n = 10)# A tibble: 10 × 8

penalty mixture .metric .estimator mean n std_err .config

<dbl> <dbl> <chr> <chr> <dbl> <int> <dbl> <chr>

1 0.920 0.183 rmse standard 2.47 5 0.373 Preprocessor1_Model019

2 0.824 0.116 rmse standard 2.47 5 0.346 Preprocessor1_Model012

3 0.797 0.179 rmse standard 2.48 5 0.359 Preprocessor1_Model018

4 0.729 0.207 rmse standard 2.49 5 0.358 Preprocessor1_Model021

5 0.857 0.0279 rmse standard 2.50 5 0.306 Preprocessor1_Model003

6 0.693 0.227 rmse standard 2.50 5 0.358 Preprocessor1_Model023

7 0.988 0.358 rmse standard 2.53 5 0.424 Preprocessor1_Model036

8 0.529 0.148 rmse standard 2.54 5 0.314 Preprocessor1_Model015

9 0.481 0.291 rmse standard 2.54 5 0.342 Preprocessor1_Model030

10 0.572 0.103 rmse standard 2.55 5 0.297 Preprocessor1_Model011For 100 different combinations of penalty and mixture, the best combinations have a value for mixture close to 1 suggesting the Lasso term is more important than the Ridge term.

sessioninfo::session_info()─ Session info ───────────────────────────────────────────────────────────────

setting value

version R version 4.3.3 (2024-02-29 ucrt)

os Windows 11 x64 (build 22631)

system x86_64, mingw32

ui RTerm

language (EN)

collate English_United Kingdom.utf8

ctype English_United Kingdom.utf8

tz Europe/Copenhagen

date 2024-05-30

pandoc 3.1.11 @ C:/Program Files/RStudio/resources/app/bin/quarto/bin/tools/ (via rmarkdown)

─ Packages ───────────────────────────────────────────────────────────────────

package * version date (UTC) lib source

backports 1.4.1 2021-12-13 [1] CRAN (R 4.3.1)

broom * 1.0.5 2023-06-09 [1] CRAN (R 4.3.3)

cachem 1.0.8 2023-05-01 [1] CRAN (R 4.3.3)

class 7.3-22 2023-05-03 [2] CRAN (R 4.3.3)

cli 3.6.2 2023-12-11 [1] CRAN (R 4.3.3)

codetools 0.2-19 2023-02-01 [2] CRAN (R 4.3.3)

colorspace 2.1-0 2023-01-23 [1] CRAN (R 4.3.3)

conflicted 1.2.0 2023-02-01 [1] CRAN (R 4.3.3)

crosstalk 1.2.1 2023-11-23 [1] CRAN (R 4.3.3)

data.table 1.15.4 2024-03-30 [1] CRAN (R 4.3.3)

dials * 1.2.1 2024-02-22 [1] CRAN (R 4.3.3)

DiceDesign 1.10 2023-12-07 [1] CRAN (R 4.3.3)

digest 0.6.35 2024-03-11 [1] CRAN (R 4.3.3)

dplyr * 1.1.4 2023-11-17 [1] CRAN (R 4.3.2)

ellipsis 0.3.2 2021-04-29 [1] CRAN (R 4.3.3)

evaluate 0.23 2023-11-01 [1] CRAN (R 4.3.3)

fansi 1.0.6 2023-12-08 [1] CRAN (R 4.3.3)

farver 2.1.1 2022-07-06 [1] CRAN (R 4.3.3)

fastmap 1.1.1 2023-02-24 [1] CRAN (R 4.3.3)

foreach 1.5.2 2022-02-02 [1] CRAN (R 4.3.3)

furrr 0.3.1 2022-08-15 [1] CRAN (R 4.3.3)

future 1.33.2 2024-03-26 [1] CRAN (R 4.3.3)

future.apply 1.11.2 2024-03-28 [1] CRAN (R 4.3.3)

generics 0.1.3 2022-07-05 [1] CRAN (R 4.3.3)

ggplot2 * 3.5.1 2024-04-23 [1] CRAN (R 4.3.3)

glmnet * 4.1-8 2023-08-22 [1] CRAN (R 4.3.3)

globals 0.16.3 2024-03-08 [1] CRAN (R 4.3.3)

glue 1.7.0 2024-01-09 [1] CRAN (R 4.3.3)

gower 1.0.1 2022-12-22 [1] CRAN (R 4.3.1)

GPfit 1.0-8 2019-02-08 [1] CRAN (R 4.3.3)

gtable 0.3.5 2024-04-22 [1] CRAN (R 4.3.3)

hardhat 1.3.1 2024-02-02 [1] CRAN (R 4.3.3)

htmltools 0.5.8.1 2024-04-04 [1] CRAN (R 4.3.3)

htmlwidgets 1.6.4 2023-12-06 [1] CRAN (R 4.3.3)

httr 1.4.7 2023-08-15 [1] CRAN (R 4.3.3)

infer * 1.0.7 2024-03-25 [1] CRAN (R 4.3.3)

ipred 0.9-14 2023-03-09 [1] CRAN (R 4.3.3)

iterators 1.0.14 2022-02-05 [1] CRAN (R 4.3.3)

jsonlite 1.8.8 2023-12-04 [1] CRAN (R 4.3.3)

knitr 1.46 2024-04-06 [1] CRAN (R 4.3.3)

labeling 0.4.3 2023-08-29 [1] CRAN (R 4.3.1)

lattice 0.22-5 2023-10-24 [2] CRAN (R 4.3.3)

lava 1.8.0 2024-03-05 [1] CRAN (R 4.3.3)

lazyeval 0.2.2 2019-03-15 [1] CRAN (R 4.3.3)

lhs 1.1.6 2022-12-17 [1] CRAN (R 4.3.3)

lifecycle 1.0.4 2023-11-07 [1] CRAN (R 4.3.3)

listenv 0.9.1 2024-01-29 [1] CRAN (R 4.3.3)

lubridate 1.9.3 2023-09-27 [1] CRAN (R 4.3.3)

magrittr 2.0.3 2022-03-30 [1] CRAN (R 4.3.3)

MASS 7.3-60.0.1 2024-01-13 [2] CRAN (R 4.3.3)

Matrix * 1.6-5 2024-01-11 [2] CRAN (R 4.3.3)

memoise 2.0.1 2021-11-26 [1] CRAN (R 4.3.3)

modeldata * 1.3.0 2024-01-21 [1] CRAN (R 4.3.3)

modelenv 0.1.1 2023-03-08 [1] CRAN (R 4.3.3)

munsell 0.5.1 2024-04-01 [1] CRAN (R 4.3.3)

nnet 7.3-19 2023-05-03 [2] CRAN (R 4.3.3)

parallelly 1.37.1 2024-02-29 [1] CRAN (R 4.3.3)

parsnip * 1.2.1 2024-03-22 [1] CRAN (R 4.3.3)

pillar 1.9.0 2023-03-22 [1] CRAN (R 4.3.3)

pkgconfig 2.0.3 2019-09-22 [1] CRAN (R 4.3.3)

plotly * 4.10.4 2024-01-13 [1] CRAN (R 4.3.3)

prodlim 2023.08.28 2023-08-28 [1] CRAN (R 4.3.3)

purrr * 1.0.2 2023-08-10 [1] CRAN (R 4.3.3)

R6 2.5.1 2021-08-19 [1] CRAN (R 4.3.3)

Rcpp 1.0.12 2024-01-09 [1] CRAN (R 4.3.3)

recipes * 1.0.10 2024-02-18 [1] CRAN (R 4.3.3)

rlang 1.1.3 2024-01-10 [1] CRAN (R 4.3.3)

rmarkdown 2.26 2024-03-05 [1] CRAN (R 4.3.3)

rpart 4.1.23 2023-12-05 [2] CRAN (R 4.3.3)

rsample * 1.2.1 2024-03-25 [1] CRAN (R 4.3.3)

rstudioapi 0.16.0 2024-03-24 [1] CRAN (R 4.3.3)

scales * 1.3.0 2023-11-28 [1] CRAN (R 4.3.3)

sessioninfo 1.2.2 2021-12-06 [1] CRAN (R 4.3.3)

shape 1.4.6.1 2024-02-23 [1] CRAN (R 4.3.2)

stringi 1.8.3 2023-12-11 [1] CRAN (R 4.3.2)

stringr 1.5.1 2023-11-14 [1] CRAN (R 4.3.3)

survival 3.5-8 2024-02-14 [2] CRAN (R 4.3.3)

tibble * 3.2.1 2023-03-20 [1] CRAN (R 4.3.3)

tidymodels * 1.2.0 2024-03-25 [1] CRAN (R 4.3.3)

tidyr * 1.3.1 2024-01-24 [1] CRAN (R 4.3.3)

tidyselect 1.2.1 2024-03-11 [1] CRAN (R 4.3.3)

timechange 0.3.0 2024-01-18 [1] CRAN (R 4.3.3)

timeDate 4032.109 2023-12-14 [1] CRAN (R 4.3.2)

tune * 1.2.1 2024-04-18 [1] CRAN (R 4.3.3)

utf8 1.2.4 2023-10-22 [1] CRAN (R 4.3.3)

vctrs 0.6.5 2023-12-01 [1] CRAN (R 4.3.3)

viridisLite 0.4.2 2023-05-02 [1] CRAN (R 4.3.3)

withr 3.0.0 2024-01-16 [1] CRAN (R 4.3.3)

workflows * 1.1.4 2024-02-19 [1] CRAN (R 4.3.3)

workflowsets * 1.1.0 2024-03-21 [1] CRAN (R 4.3.3)

xfun 0.43 2024-03-25 [1] CRAN (R 4.3.3)

yaml 2.3.8 2023-12-11 [1] CRAN (R 4.3.2)

yardstick * 1.3.1 2024-03-21 [1] CRAN (R 4.3.3)

[1] C:/Users/Willi/AppData/Local/R/win-library/4.3

[2] C:/Program Files/R/R-4.3.3/library

──────────────────────────────────────────────────────────────────────────────