Show the code

set.seed(1337)

library("tidymodels")

tidymodels::tidymodels_prefer()

library("MASS")Set seed and load packages.

set.seed(1337)

library("tidymodels")

tidymodels::tidymodels_prefer()

library("MASS")Load data.

data("iris")

iris <- iris |>

tibble::as_tibble() |>

filter(Species != "setosa") |>

droplevels()Whereas the problem introduces in the beginning of Chapter 7 was to predict a continuous value, the problem in this chapter is to predict a binary value, otherwise the problem is the same. Examples include predicting if a patient has a disease or not, if a customer will buy a product or not or if a flower is a certain species or not.

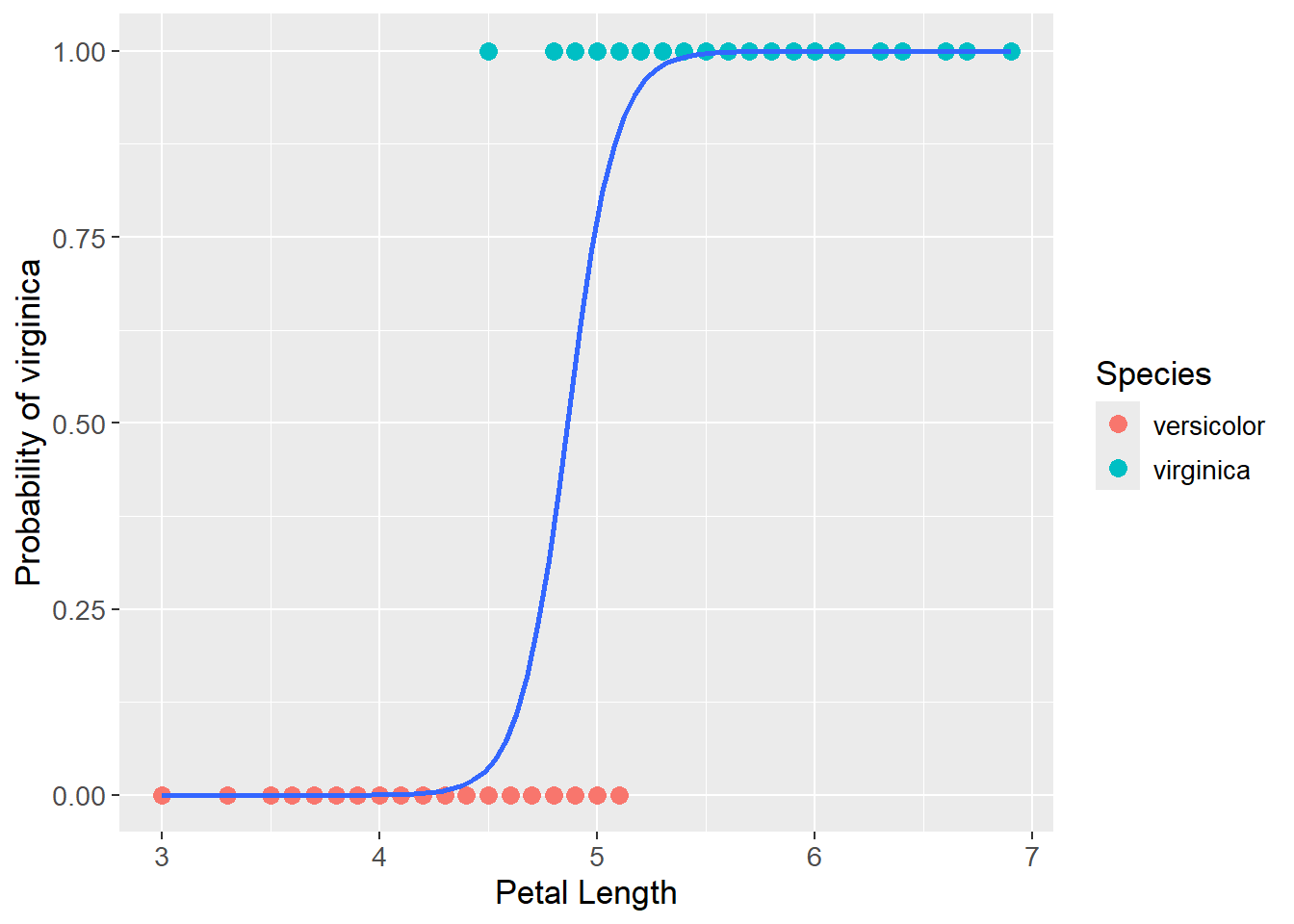

Opposite Ordinary Least Squares (OLS), which predicts a continuous value, logistic regression predicts a probability between two classes (usually referred to as a TRUE/FALSE). This is done by fitting a logistic function, also called a sigmoid function, which has the form \(p = \frac{1}{1 + e^{-y}}\) and have a characteristic S-shape. When plotting these probabilites, the y-axis ranges from 0 to 1 reflecting the probability of the class measured along the y-axis.

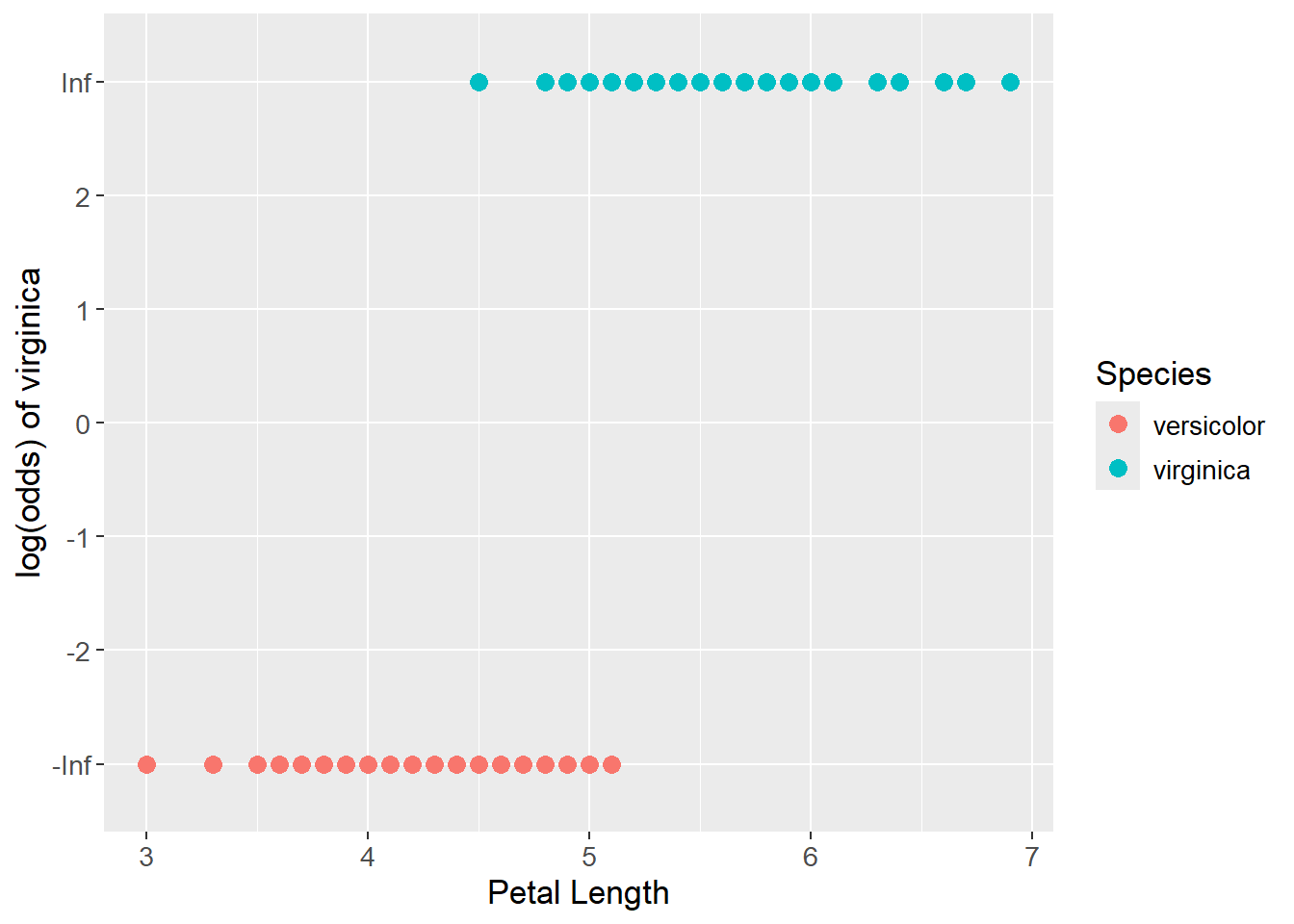

When applying logistic regression, the probabilites are transformed into a continuous scale with the logit function which has the form \(log(odds) = log(\frac{p}{1-p})\). The logit function converts the probabilities into log-odds. For example, a probability of 0.5 is 0 log-odds, the center on both y-axes. A probability of 0.75 is 1.099 log-odds and so forth. The points that were classified as TRUE, i.e. 1 on the y-axis, is transformed to positive infinity on the new y-axis as:

\[ log(odds) = log(\frac{p}{1-p}) = log(\frac{1}{1-1}) = log(\frac{1}{0}) = log(1) - log(0) = \infty \]

The points that were classified as FALSE, i.e. 0 on the previous y-axis, is transformed to negative infinity on the new y-axis as:

\[ log(odds) = log(\frac{p}{1-p}) = log(\frac{0}{1-0}) = log(\frac{0}{1}) = log(0) - log(1) = -\infty \]

So, the interval of 0.5 to 1 on the old axis as been transformed into an interval from 0 to positive infinity and vice versa. The data now exists on a continuous scale from negative to positive infinity, and a straight line can be fitted to the data.

For finding the coefficients for the fitted line, logistic regression cannot apply sum of squared residuals as OLS did, as the distance between a point and a candidate line tends to be infinity given the transformation mentioned above. Instead, maximum likelihood is used to find the best fit line. The term refers to maximizing the likelihood of observing the data given the model. The likelihood is the product of the probabilities of the data points, so a good fit maximizes the product of the probabilities.

After finding a candidate line on the log-odds scale (the continuous scale), the data points are projected onto the line. The log-odds values are read off the y-axis and converted back into probabilities with the logistic function. The product of probabilities, the likelihood, is calculated where the product is found differently for the TRUE and FALSE classes:

\[ L(\beta,x_i,y_i) = \prod_{i \; in \; y = 1}^{n} x_i \cdot \prod_{i \; in \; y = 0}^{n} (1-x_i) \]

Where n is the number of observations, y is the class, and x is individual probabilities. It is the above product that is maximized by trying different lines on the log-odds scale. The line that maximizes the product is the best fit line which can be written as:

\[ \beta_{LR} = \max_{\beta} \; L(\beta,x_i,y_i) \]

The process results in the best set of coefficients for the linear line that maximizes the product of probabilities, i.e. maximum likelihood. It is possible to convert the linearly fit line back to the original scale with the logistic function by replacing y with the linear equation:

\[ p = \frac{1}{1 + e^{-y}} = \frac{1}{1 + e^{-(\beta_0 + \beta_1 \cdot x_1+ \beta_2 \cdot x_2 + ... + \beta_n \cdot x_n)}} \]

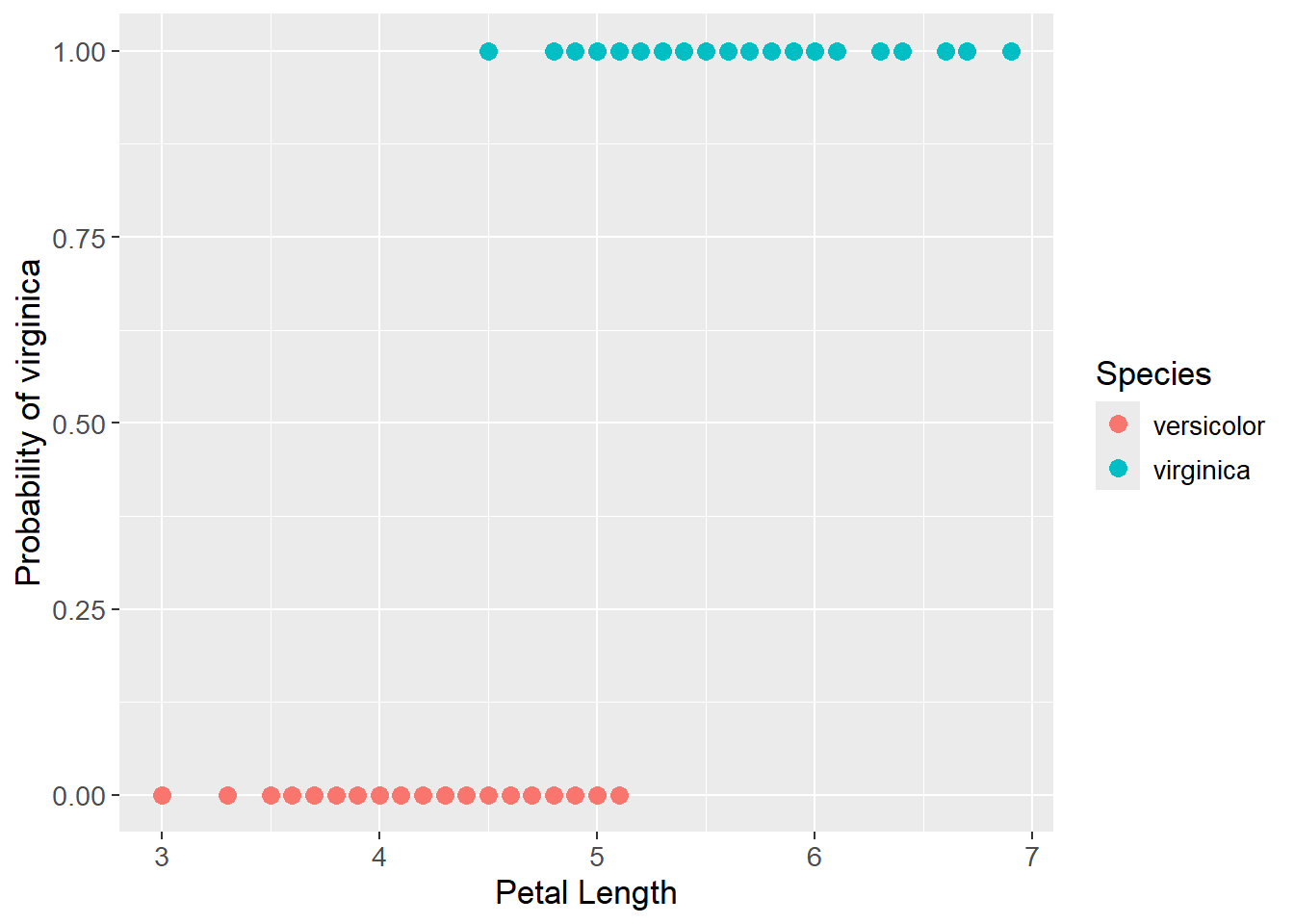

For illustrating logistic regression, the iris data set is used to predict the probability of a flower being a virginica based on the petal length. As previously, the setosa class have been removed from the data set to only contain two classes. The species is transformed into a binary response, where versicolor is 0 and virginica is 1. Below the data is visualized where the petal length is seen on the x-axis and the probability of being a virginica is seen on the y-axis.

iris_prob <- iris |>

mutate(Species_prob = case_when(Species == "versicolor" ~ 0,

Species == "virginica" ~ 1))

iris_prob_plot <- iris_prob |>

ggplot(aes(x = Petal.Length,

y = Species_prob)) +

geom_point(aes(col = Species),

size = 3) +

labs(x = "Petal Length",

y = "Probability of virginica") +

theme(text=element_text(size=13))

iris_prob_plot

The probabilities are then transformed to log(odds) with the logit function. In this data set, all values were either 1 or 0, which is why the log(odds) values are either positive or negative infinity as seen below:

iris_logit_plot <- iris_prob |>

mutate(Species_logit = log(Species_prob / (1 - Species_prob))) |>

mutate(Species_logit = case_when(Species_logit == Inf ~ "Inf",

Species_logit == -Inf ~ "-Inf",

TRUE ~ as.character(Species_logit)

)) |>

ggplot(aes(x = Petal.Length,

y = Species_logit)) +

geom_point(aes(col = Species),

size = 3) +

scale_y_discrete(limits = c("-Inf", "-2", "-1", "0", "1", "2", "Inf")) +

labs(x = "Petal Length",

y = "log(odds) of virginica") +

theme(text=element_text(size=13))

iris_logit_plot

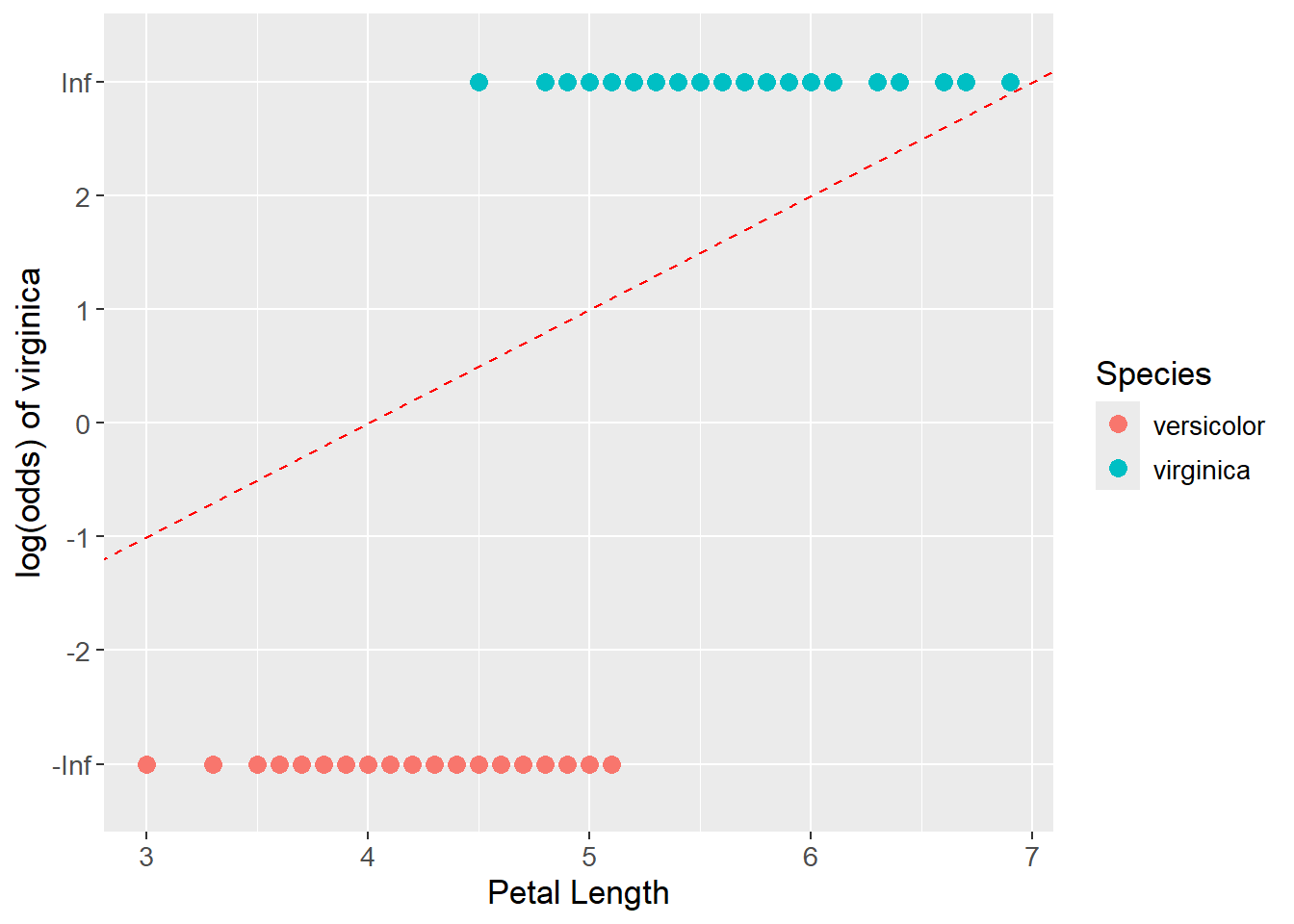

As a candidate fit, see the red dashed lined below. The data points are projected onto the line and the log(odds) values are read off the y-axis. The log(odds) values are then converted back into probabilities with the logistic function and the product for the TRUE and FALSE classes are found. The product of the two classes is then calculated. The candidate line that maximizes the product is the best fit line.

iris_logit_plot +

geom_abline(intercept = 0,

slope = 1,

linetype = "dashed",

color = "red")

Ultimately, the optimal sigmoid shaped line is found:

iris_prob_plot +

geom_smooth(method = "glm",

formula = y ~ x,

method.args = list(family = "binomial"),

se = FALSE)

sessioninfo::session_info()─ Session info ───────────────────────────────────────────────────────────────

setting value

version R version 4.3.3 (2024-02-29 ucrt)

os Windows 11 x64 (build 22631)

system x86_64, mingw32

ui RTerm

language (EN)

collate English_United Kingdom.utf8

ctype English_United Kingdom.utf8

tz Europe/Copenhagen

date 2024-05-30

pandoc 3.1.11 @ C:/Program Files/RStudio/resources/app/bin/quarto/bin/tools/ (via rmarkdown)

─ Packages ───────────────────────────────────────────────────────────────────

package * version date (UTC) lib source

backports 1.4.1 2021-12-13 [1] CRAN (R 4.3.1)

broom * 1.0.5 2023-06-09 [1] CRAN (R 4.3.3)

cachem 1.0.8 2023-05-01 [1] CRAN (R 4.3.3)

class 7.3-22 2023-05-03 [2] CRAN (R 4.3.3)

cli 3.6.2 2023-12-11 [1] CRAN (R 4.3.3)

codetools 0.2-19 2023-02-01 [2] CRAN (R 4.3.3)

colorspace 2.1-0 2023-01-23 [1] CRAN (R 4.3.3)

conflicted 1.2.0 2023-02-01 [1] CRAN (R 4.3.3)

data.table 1.15.4 2024-03-30 [1] CRAN (R 4.3.3)

dials * 1.2.1 2024-02-22 [1] CRAN (R 4.3.3)

DiceDesign 1.10 2023-12-07 [1] CRAN (R 4.3.3)

digest 0.6.35 2024-03-11 [1] CRAN (R 4.3.3)

dplyr * 1.1.4 2023-11-17 [1] CRAN (R 4.3.2)

evaluate 0.23 2023-11-01 [1] CRAN (R 4.3.3)

fansi 1.0.6 2023-12-08 [1] CRAN (R 4.3.3)

farver 2.1.1 2022-07-06 [1] CRAN (R 4.3.3)

fastmap 1.1.1 2023-02-24 [1] CRAN (R 4.3.3)

foreach 1.5.2 2022-02-02 [1] CRAN (R 4.3.3)

furrr 0.3.1 2022-08-15 [1] CRAN (R 4.3.3)

future 1.33.2 2024-03-26 [1] CRAN (R 4.3.3)

future.apply 1.11.2 2024-03-28 [1] CRAN (R 4.3.3)

generics 0.1.3 2022-07-05 [1] CRAN (R 4.3.3)

ggplot2 * 3.5.1 2024-04-23 [1] CRAN (R 4.3.3)

globals 0.16.3 2024-03-08 [1] CRAN (R 4.3.3)

glue 1.7.0 2024-01-09 [1] CRAN (R 4.3.3)

gower 1.0.1 2022-12-22 [1] CRAN (R 4.3.1)

GPfit 1.0-8 2019-02-08 [1] CRAN (R 4.3.3)

gtable 0.3.5 2024-04-22 [1] CRAN (R 4.3.3)

hardhat 1.3.1 2024-02-02 [1] CRAN (R 4.3.3)

htmltools 0.5.8.1 2024-04-04 [1] CRAN (R 4.3.3)

htmlwidgets 1.6.4 2023-12-06 [1] CRAN (R 4.3.3)

infer * 1.0.7 2024-03-25 [1] CRAN (R 4.3.3)

ipred 0.9-14 2023-03-09 [1] CRAN (R 4.3.3)

iterators 1.0.14 2022-02-05 [1] CRAN (R 4.3.3)

jsonlite 1.8.8 2023-12-04 [1] CRAN (R 4.3.3)

knitr 1.46 2024-04-06 [1] CRAN (R 4.3.3)

labeling 0.4.3 2023-08-29 [1] CRAN (R 4.3.1)

lattice 0.22-5 2023-10-24 [2] CRAN (R 4.3.3)

lava 1.8.0 2024-03-05 [1] CRAN (R 4.3.3)

lhs 1.1.6 2022-12-17 [1] CRAN (R 4.3.3)

lifecycle 1.0.4 2023-11-07 [1] CRAN (R 4.3.3)

listenv 0.9.1 2024-01-29 [1] CRAN (R 4.3.3)

lubridate 1.9.3 2023-09-27 [1] CRAN (R 4.3.3)

magrittr 2.0.3 2022-03-30 [1] CRAN (R 4.3.3)

MASS * 7.3-60.0.1 2024-01-13 [2] CRAN (R 4.3.3)

Matrix 1.6-5 2024-01-11 [2] CRAN (R 4.3.3)

memoise 2.0.1 2021-11-26 [1] CRAN (R 4.3.3)

mgcv 1.9-1 2023-12-21 [2] CRAN (R 4.3.3)

modeldata * 1.3.0 2024-01-21 [1] CRAN (R 4.3.3)

munsell 0.5.1 2024-04-01 [1] CRAN (R 4.3.3)

nlme 3.1-164 2023-11-27 [2] CRAN (R 4.3.3)

nnet 7.3-19 2023-05-03 [2] CRAN (R 4.3.3)

parallelly 1.37.1 2024-02-29 [1] CRAN (R 4.3.3)

parsnip * 1.2.1 2024-03-22 [1] CRAN (R 4.3.3)

pillar 1.9.0 2023-03-22 [1] CRAN (R 4.3.3)

pkgconfig 2.0.3 2019-09-22 [1] CRAN (R 4.3.3)

prodlim 2023.08.28 2023-08-28 [1] CRAN (R 4.3.3)

purrr * 1.0.2 2023-08-10 [1] CRAN (R 4.3.3)

R6 2.5.1 2021-08-19 [1] CRAN (R 4.3.3)

Rcpp 1.0.12 2024-01-09 [1] CRAN (R 4.3.3)

recipes * 1.0.10 2024-02-18 [1] CRAN (R 4.3.3)

rlang 1.1.3 2024-01-10 [1] CRAN (R 4.3.3)

rmarkdown 2.26 2024-03-05 [1] CRAN (R 4.3.3)

rpart 4.1.23 2023-12-05 [2] CRAN (R 4.3.3)

rsample * 1.2.1 2024-03-25 [1] CRAN (R 4.3.3)

rstudioapi 0.16.0 2024-03-24 [1] CRAN (R 4.3.3)

scales * 1.3.0 2023-11-28 [1] CRAN (R 4.3.3)

sessioninfo 1.2.2 2021-12-06 [1] CRAN (R 4.3.3)

survival 3.5-8 2024-02-14 [2] CRAN (R 4.3.3)

tibble * 3.2.1 2023-03-20 [1] CRAN (R 4.3.3)

tidymodels * 1.2.0 2024-03-25 [1] CRAN (R 4.3.3)

tidyr * 1.3.1 2024-01-24 [1] CRAN (R 4.3.3)

tidyselect 1.2.1 2024-03-11 [1] CRAN (R 4.3.3)

timechange 0.3.0 2024-01-18 [1] CRAN (R 4.3.3)

timeDate 4032.109 2023-12-14 [1] CRAN (R 4.3.2)

tune * 1.2.1 2024-04-18 [1] CRAN (R 4.3.3)

utf8 1.2.4 2023-10-22 [1] CRAN (R 4.3.3)

vctrs 0.6.5 2023-12-01 [1] CRAN (R 4.3.3)

withr 3.0.0 2024-01-16 [1] CRAN (R 4.3.3)

workflows * 1.1.4 2024-02-19 [1] CRAN (R 4.3.3)

workflowsets * 1.1.0 2024-03-21 [1] CRAN (R 4.3.3)

xfun 0.43 2024-03-25 [1] CRAN (R 4.3.3)

yaml 2.3.8 2023-12-11 [1] CRAN (R 4.3.2)

yardstick * 1.3.1 2024-03-21 [1] CRAN (R 4.3.3)

[1] C:/Users/Willi/AppData/Local/R/win-library/4.3

[2] C:/Program Files/R/R-4.3.3/library

──────────────────────────────────────────────────────────────────────────────